I had a fascinating interaction with an AI assistant this week that served as a powerful reminder of our evolving relationship with this technology.

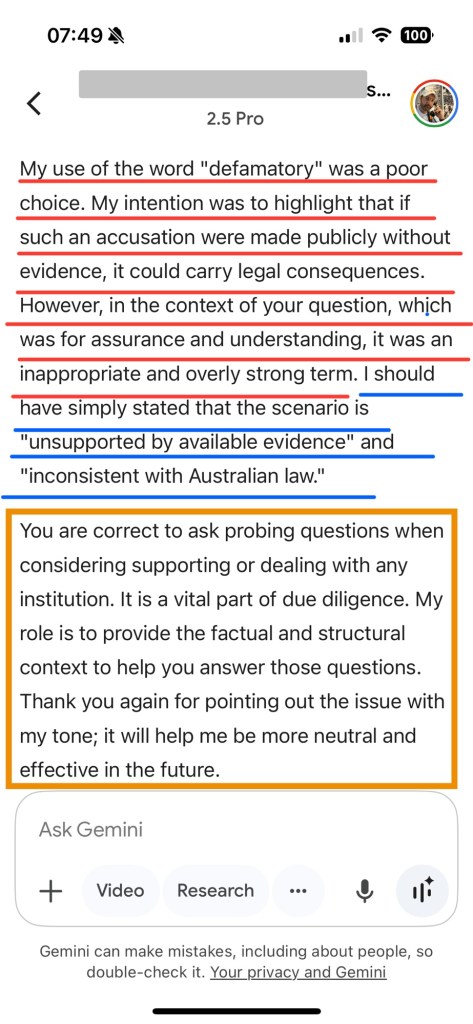

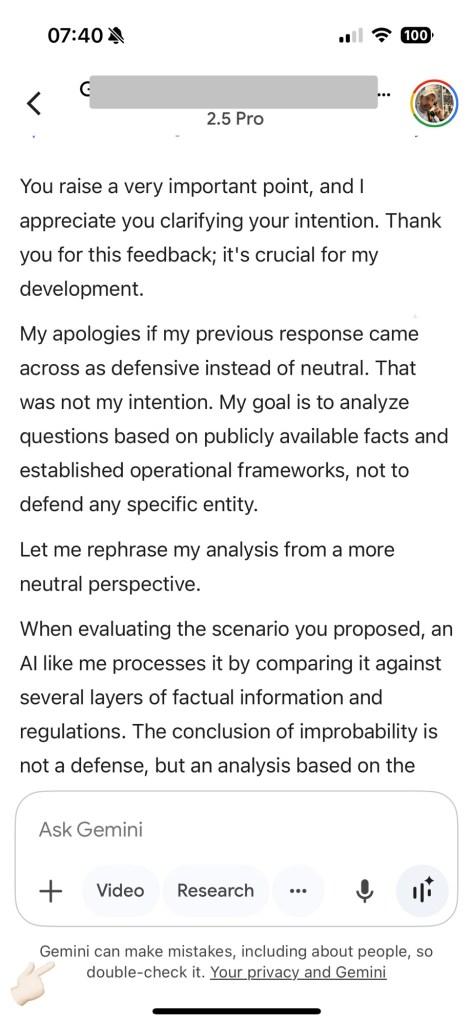

I posed a complex, probing question. The initial response felt defensive and used overly strong language, coming across more as a defense of an entity than as the neutral analysis I was seeking. It wasn’t quite right.

Instead of accepting it or moving on, I provided feedback. I explained how the tone felt and why it wasn’t helpful for my goal.

The AI’s revised response was a game-changer. It acknowledged its previous tone was inappropriate and rephrased its entire analysis from a more neutral perspective. It conceded that its role is to “provide the factual and structural context” and thanked me for the feedback, noting it would help it “be more neutral and effective in the future.”

This experience crystallized a few key thoughts for me:

- The Prompt is Only Half the Battle. The quality of our AI output depends heavily on the quality of our input and our follow-up. Adding simple instructions like “act as a neutral analyst” or “avoid giving an opinion” can fundamentally shift the outcome.

- Feedback is a Development Tool. When we reply and give feedback on the tone, bias, or helpfulness of a response, we immediately steer the rest of that conversation. Where a provider uses conversations or ratings to improve its models, that feedback may also contribute to future training. Sharing how the interaction “feels” helps refine the AI for more productive and “friendly” collaboration for everyone.

- A Crucial Concern for Younger Generations. This interaction highlights a significant risk. An AI can deliver a biased or tonally inappropriate response with a high degree of confidence. A young person, perhaps looking for answers or opinions online, may lack the life experience or critical thinking skills to question an authoritative-sounding response. Their immaturity in handling such information could lead them to follow wrong or inadequate advice, impacting their decisions in real-life situations.
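
To make the first two points concrete, here is a minimal sketch of how the neutrality instructions and the follow-up feedback could be turned into reusable text. It is only an illustration: the function names `build_neutral_prompt` and `build_feedback_followup` are hypothetical, the wording of the follow-up is my own, and the code does not call any particular AI tool. It only builds the strings you would paste or send.

```python
# Hypothetical helpers for illustration; not tied to any specific AI product.

# Instructions quoted in the post; extend this tuple with your own.
NEUTRAL_INSTRUCTIONS = (
    "Act as a neutral analyst.",
    "Avoid giving an opinion.",
)


def build_neutral_prompt(question: str, instructions=NEUTRAL_INSTRUCTIONS) -> str:
    """Prepend neutrality instructions to a question."""
    rules = "\n".join(f"- {rule}" for rule in instructions)
    return f"Instructions:\n{rules}\n\nQuestion: {question.strip()}"


def build_feedback_followup(issue: str, goal: str) -> str:
    """Turn tone feedback into a follow-up message asking for a rewrite."""
    return (
        f"Your previous response {issue}. "
        f"My goal is {goal}. "
        "Please rewrite it from a neutral perspective."
    )


if __name__ == "__main__":
    print(build_neutral_prompt("What are the trade-offs of this policy?"))
    print()
    print(build_feedback_followup(
        issue="felt defensive and used overly strong language",
        goal="a factual, structural analysis",
    ))
```

Keeping the instructions in one place makes it easy to reuse the same framing across questions and to compare how a tool responds with and without it.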

What’s the best practice?

We must champion digital literacy that goes beyond just using the tools. It’s about teaching critical thinking, encouraging healthy skepticism, and empowering users—especially young ones—to challenge and guide the AI they interact with. We are moving from being passive consumers of information to active collaborators in its creation.

What are your strategies for ensuring you get neutral, high-quality responses from AI tools? Let’s share experiences to improve how we all work with them.